The Ethical Dilemma of AI-Created Images: Why We Should Avoid Depicting Violence and Destruction

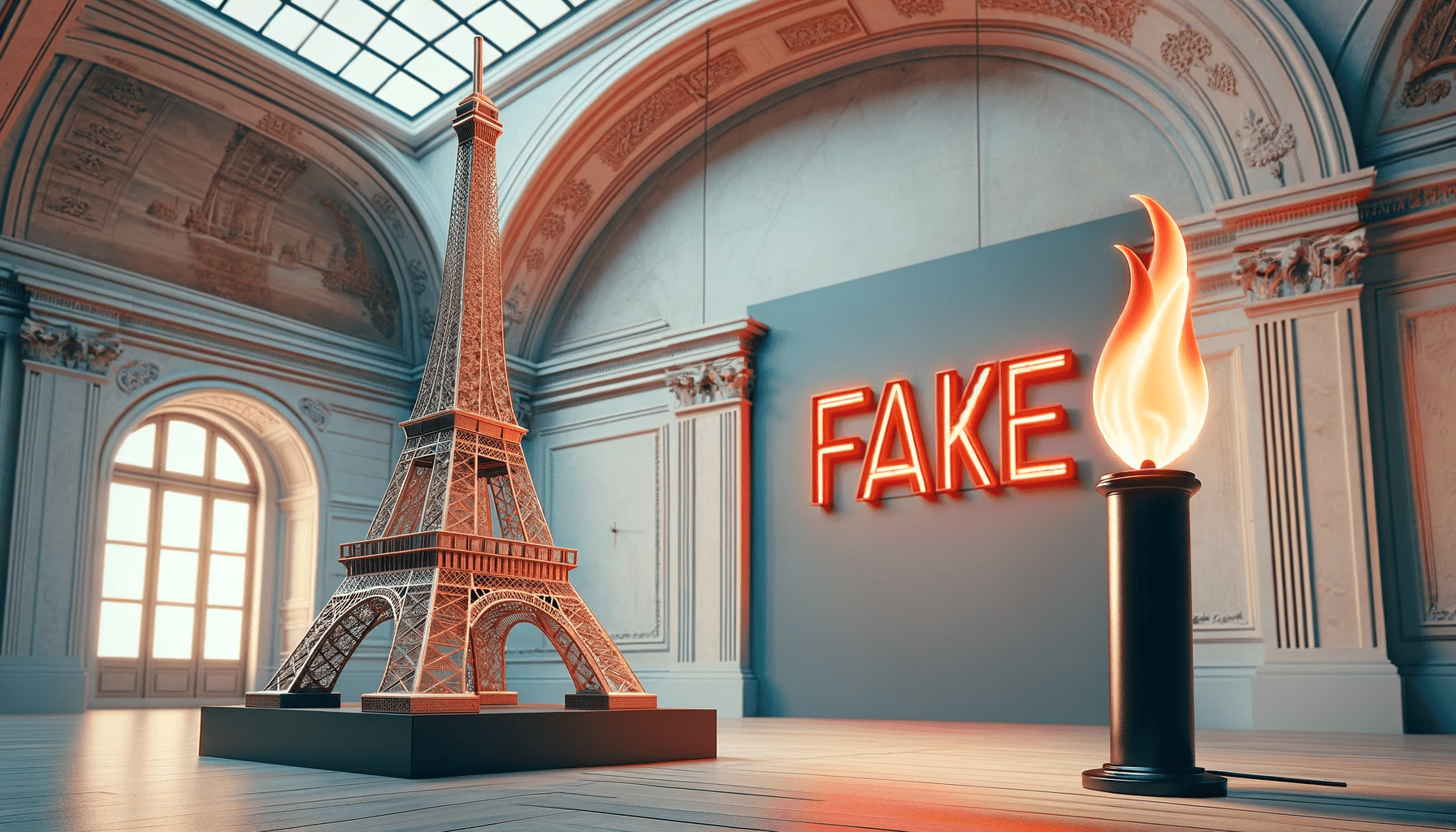

In an era where artificial intelligence (AI) can generate breathtakingly realistic images, we find ourselves at a crossroads between technological marvel and ethical quandary. A striking example is the fabricated image of the Eiffel Tower on fire in 2023, which caused a global stir when it went viral in 2024. Although the fire never happened, the episode highlights a significant ethical concern: the use of AI to create fake images depicting violence, destruction, or similarly negative themes.

Why Creating Fake Images of Violence is Problematic

- Spreading Misinformation: In the digital age, misinformation spreads faster than the truth. Fake images, like the Eiffel Tower fire, can lead to widespread panic and confusion. This not only causes unnecessary distress among the public but also undermines trust in digital media.

- Moral and Ethical Concerns: Creating and disseminating images of violence or disaster, even fictional ones, can be deeply distressing to viewers. It can also trivialize the real suffering and trauma experienced by victims of actual disasters and conflicts.

- Impact on Mental Health: Constant exposure to violent or destructive imagery, even when known to be artificial, can have a detrimental effect on mental health. It can desensitize individuals to real-world violence and increase anxiety and fear.

- Legal and Social Ramifications: Fabricating images of disaster or violence can have legal consequences, especially if they lead to public disorder or affect stock markets and economies. Socially, it contributes to a culture of fear and mistrust.

The Eiffel Tower Fire Hoax: A Case Study

The image of the Eiffel Tower supposedly on fire in 2023 is a prime example of the harm that AI-generated fake images can do. Though technically impressive, the image was widely shared across social media platforms and caused unnecessary alarm, leaving many people around the world believing the fire was real. Fact-checkers and authorities had to spend considerable effort debunking the hoax, which illustrates how much time and how many resources are wasted in correcting such misinformation.

What Can Be Done?

- Regulation and Standards: There is a need for stricter regulations and ethical standards governing the use of AI in creating images, especially those depicting sensitive subjects like violence or disaster.

- Public Awareness: Educating the public about the capabilities and potential misuse of AI in generating fake imagery is crucial. Increased awareness can lead to more skepticism and critical thinking when encountering such images.

- Technological Solutions: Developing AI tools that can detect and flag AI-generated fake images can help limit the spread of such content.

- Ethical AI Development: Encouraging a culture of ethical AI development, where creators are mindful of the societal impact of their creations, is essential.

While AI offers incredible opportunities for creativity and innovation, it is our collective responsibility to ensure that this technology is used ethically and responsibly. The Eiffel Tower fire hoax is a reminder of the potential consequences of using AI to create and disseminate fake images of violence and destruction. As we move forward, let us embrace the benefits of AI while staying acutely aware of its ethical implications and actively addressing them.