
Welcome! You’re about to get acquainted with Meta Llama 3, a large language model developed by Meta. This page is your go-to resource for understanding what Meta Llama 3 is, including its capabilities, advantages, limitations, and guidance on how to use it effectively. Let’s jump right in!

What is Meta Llama 3?

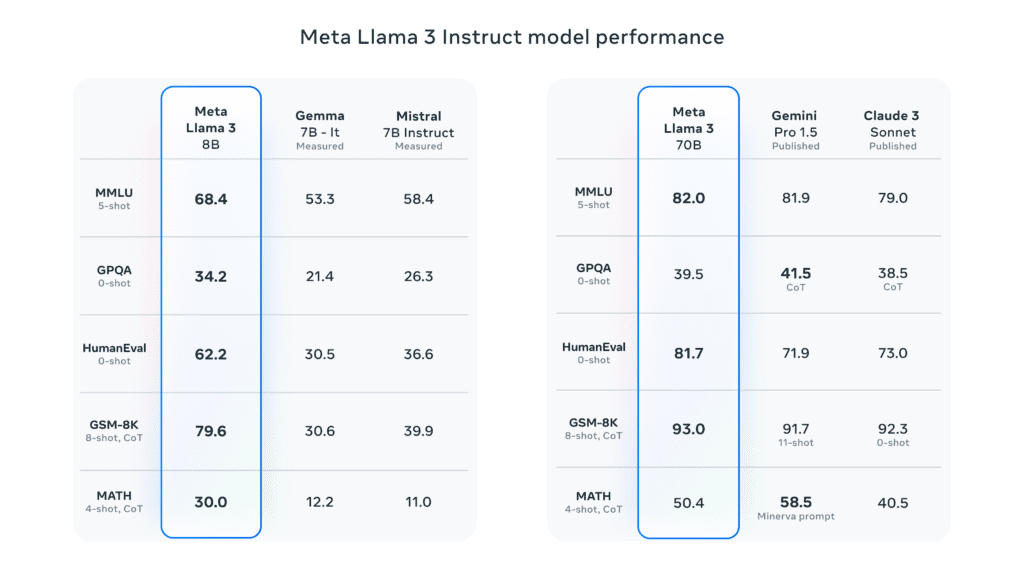

Meta Llama 3 is the latest iteration of Meta’s open-source Llama series of large language models (LLMs). Whether you’re a developer or an innovator, this model is designed to assist you in a multitude of tasks, from coding to content creation. It is available in 8B and 70B parameter versions, and Meta reports state-of-the-art results for openly available models at those sizes.

Meta Llama 3 is a language model rather than a framework: it takes text as input and generates text and code as output. If you’re looking to integrate AI into your projects, this model could be a great fit.

Performance and Capabilities

Meta Llama 3 is built for natural language processing tasks such as summarization, question answering, and text analysis, where it delivers consistent, high-quality results. It is a text-only model, so tasks like image recognition are outside its scope.

With substantial improvements over its predecessor, Meta Llama 3 shows stronger reasoning and code generation. Users will notice better model responses, thanks to improved pretraining and fine-tuning techniques. According to Meta’s published benchmark evaluations, Llama 3 is among the strongest openly available models in its size class.

Pros and Cons

Pros:

- High Accuracy: Exceptional at delivering precise outcomes.

- Scalability: Available in 8B and 70B sizes to match different workloads and hardware budgets.

- User-friendly: Straightforward for developers to implement.

Cons:

- Resource Intensive: Requires significant computing power.

- Learning Curve: Might be challenging for beginners.
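To give a rough sense of what "resource intensive" means here, the sketch below estimates the memory needed just to hold the model weights. It assumes nominal parameter counts of 8 billion and 70 billion, 2 bytes per parameter for 16-bit weights, and 0.5 bytes per parameter for 4-bit quantized weights. The function name and the precision labels are illustrative, and real usage will be higher once activations and runtime overhead are included.

```python
# Rough, weights-only memory estimate for the two Llama 3 sizes.
# Assumptions (not from the original article): nominal parameter counts,
# 2 bytes/param for 16-bit weights, 0.5 bytes/param for 4-bit quantization.
# Activations, the KV cache, and framework overhead are NOT included.

def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


MODEL_SIZES = {"8B": 8e9, "70B": 70e9}
PRECISIONS = {"16-bit": 2.0, "4-bit": 0.5}


def estimate_all() -> dict:
    """Return {(model, precision): gigabytes} for every combination."""
    return {
        (model, precision): weight_memory_gb(params, nbytes)
        for model, params in MODEL_SIZES.items()
        for precision, nbytes in PRECISIONS.items()
    }


if __name__ == "__main__":
    for (model, precision), gb in estimate_all().items():
        print(f"Llama 3 {model} at {precision}: about {gb:.0f} GB for weights")
```

Under these assumptions, the 8B model at 16-bit precision is within reach of a single high-memory GPU, while the 70B model generally needs several GPUs or quantization.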

How to Use Meta Llama 3

This open-source AI model can be accessed in at least three different ways.

Deploying Llama 3 locally via GitHub

Getting started with Meta Llama 3 involves a few key actions:

- Access the model via the official link to Meta’s introductory blog post provided on this page.

- Follow the provided documentation to set up your environment, which includes downloading the Llama 3 models from GitHub.

- Begin integrating the model into your projects, utilizing the extensive examples and tutorials available.
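Once the weights are set up, the instruction-tuned variants expect prompts in a specific chat layout. The sketch below builds that prompt string by hand, using the special tokens from Meta's published Llama 3 prompt format. The helper name `build_llama3_prompt` is hypothetical. In practice the tokenizer or inference library you use will usually apply this template for you, so treat this as an illustration of the layout rather than a required step.

```python
from typing import Dict, List

# Special tokens from the Llama 3 instruct prompt format.
BEGIN_OF_TEXT = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
END_OF_TURN = "<|eot_id|>"

ALLOWED_ROLES = {"system", "user", "assistant"}


def build_llama3_prompt(messages: List[Dict[str, str]]) -> str:
    """Format chat messages into a single Llama 3 instruct prompt string.

    `messages` is a list of {"role": ..., "content": ...} dicts. The result
    ends with an open assistant header so the model writes the next turn.
    (Hypothetical helper, not part of any official library.)
    """
    if not messages:
        raise ValueError("at least one message is required")

    parts = [BEGIN_OF_TEXT]
    for message in messages:
        role = message["role"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unsupported role: {role!r}")
        content = message["content"].strip()
        parts.append(f"{START_HEADER}{role}{END_HEADER}\n\n{content}{END_OF_TURN}")

    # Leave the assistant turn open for the model to complete.
    parts.append(f"{START_HEADER}assistant{END_HEADER}\n\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = build_llama3_prompt([
        {"role": "system", "content": "You are a helpful coding assistant."},
        {"role": "user", "content": "Write a function that reverses a string."},
    ])
    print(prompt)
```

The resulting string can be passed to whichever runtime loads the downloaded weights. Generation normally stops when the model emits `<|eot_id|>`.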

Integration of Llama 3 within cloud platforms

Alternatively, once the model becomes available on cloud platforms:

- Choose your platform from the likes of AWS, Google Cloud, or Microsoft Azure.

- Access Meta Llama 3 through the platform’s marketplace.

- Integrate the model into your projects or start experimenting directly in the platform’s environment.

Meta Llama 3 not only supports your current AI initiatives but also sets the stage for future advancements. By using this model, you ensure your projects stay at the forefront of technological progress.

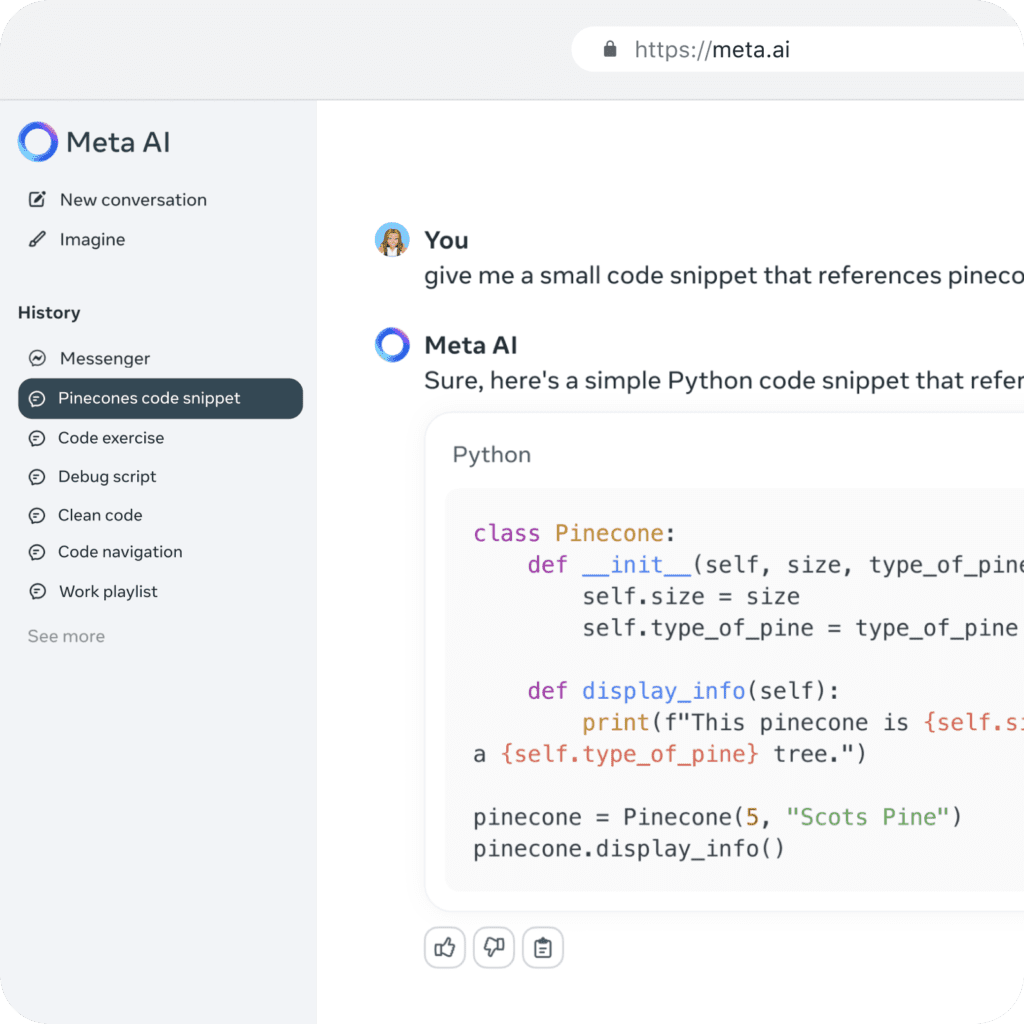

Integration of Llama 3 within Meta AI

Meta has integrated Llama 3 into Meta AI, its intelligent assistant, expanding the ways in which people can accomplish tasks, create, and connect using Meta AI. You can see the performance of Llama 3 firsthand by using Meta AI for coding tasks and problem-solving.

Whether you are developing agents or other AI-powered applications, Llama 3 in both its 8B and 70B versions offers the capabilities and flexibility you need to develop your ideas.

Ready to Start?

Now that you know what Meta Llama 3 can do for you, why not give it a try? Harness the capabilities of this advanced tool to push your projects beyond traditional boundaries. Remember, Meta Llama 3 is more than just a tool—it’s your partner in navigating the complexities of artificial intelligence.
