If you’re keen to gauge the speed and performance of your GPT-based AI applications or services, AI speed tests can be your go-to tool. These tests aren’t just for tech wizards; they’re accessible to anyone curious about the efficiency of their digital solutions. By running them, you can examine how your AI performs under different conditions and pinpoint areas for improvement.

What is an AI Speed Test?

An AI speed test, especially for models like ChatGPT, measures a model’s throughput, typically as the number of tokens it generates per second. This metric is central to understanding how an AI deployment performs in operation. In practical terms, it tells you how quickly a model like ChatGPT can process input and respond, which directly affects user experience.
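As a minimal sketch of the metric itself, the snippet below times a single call and divides the number of generated (completion) tokens by the elapsed time. `fake_model` is a hypothetical stand-in for a real model call; in practice the token count would come from the API response (OpenAI’s API, for example, reports it in a `usage` field) or from the model’s tokenizer.

```python
import time
from typing import Callable, Tuple


def fake_model(prompt: str) -> Tuple[str, int]:
    """Hypothetical stand-in for a real model call.

    Returns the generated text and the number of completion tokens,
    which a real API would report for you.
    """
    time.sleep(0.05)  # pretend generation takes about 50 ms
    return "word " * 20, 20


def tokens_per_second(call: Callable[[str], Tuple[str, int]], prompt: str) -> float:
    """Time one call and divide the tokens it generated by the elapsed seconds."""
    start = time.perf_counter()
    _, completion_tokens = call(prompt)
    elapsed = time.perf_counter() - start
    return completion_tokens / elapsed


rate = tokens_per_second(fake_model, "Hello")
print(f"{rate:.1f} tokens/s")
```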

For instance, when you interact with ChatGPT, the text you send is split into “tokens” (words or parts of words), and the reply is generated one token at a time. The speed test measures how quickly the model processes your input and produces those output tokens. This matters most where response time is critical, such as real-time applications, or when scaling a service to accommodate more users.
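Because the reply arrives token by token, speed tests often report two separate numbers: the time until the first token appears, and the rate at which the remaining tokens are generated. The sketch below measures both over a streamed response. `fake_stream` is a hypothetical generator standing in for a streaming API, and splitting the metric this way is a common convention, not a requirement.

```python
import time
from typing import Iterator


def fake_stream(n_tokens: int, delay: float = 0.01) -> Iterator[str]:
    """Hypothetical streaming model: yields one token every `delay` seconds."""
    for i in range(n_tokens):
        time.sleep(delay)
        yield f"tok{i} "


def measure_stream(stream: Iterator[str]) -> dict:
    """Record time to first token (TTFT) and the generation rate after it."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in stream:
        if first_token_at is None:
            first_token_at = time.perf_counter()
        count += 1
    end = time.perf_counter()
    if first_token_at is None:  # the stream produced nothing
        return {"tokens": 0, "ttft_s": None, "tokens_per_s": 0.0}
    decode_time = end - first_token_at
    # Tokens after the first one, divided by the time spent producing them.
    rate = (count - 1) / decode_time if count > 1 and decode_time > 0 else 0.0
    return {"tokens": count, "ttft_s": first_token_at - start, "tokens_per_s": rate}


stats = measure_stream(fake_stream(30))
print(stats)
```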

Such tests are valuable because they expose performance bottlenecks and guide optimization, helping developers and engineers keep the model efficient under varying loads. The result is better resource utilization and a more responsive experience for users.

How to Use an AI Speed Test?

To use an AI speed test effectively, especially for evaluating the performance of chatbots like ChatGPT, you need a testing framework that can simulate real-world usage. One practical option is Locust, an open-source load-testing tool in which test scenarios are written in Python. Here’s a step-by-step guide to setting up and running this type of AI speed test:

Step 1: Define Your Testing Objectives

First, decide which aspects of the AI’s performance matter most to you. These could include response time, the number of tokens generated per second, and how the system scales under heavy load.
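One way to make these objectives concrete is to write them down as pass/fail thresholds before running anything. The structure below is a hypothetical sketch; the field names and the numbers in the example are placeholders for your own targets, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeedTestObjectives:
    """Hypothetical pass/fail targets for one speed-test run."""
    concurrent_users: int      # how many simulated chatbots to run at once
    max_p95_latency_s: float   # 95th-percentile response-time budget, in seconds
    min_tokens_per_s: float    # aggregate generation rate the system must reach


def meets_objectives(obj: SpeedTestObjectives, p95_latency_s: float, tokens_per_s: float) -> dict:
    """Compare measured results against each target and report which ones pass."""
    return {
        "latency_ok": p95_latency_s <= obj.max_p95_latency_s,
        "throughput_ok": tokens_per_s >= obj.min_tokens_per_s,
    }


goals = SpeedTestObjectives(concurrent_users=50, max_p95_latency_s=2.0, min_tokens_per_s=500.0)
print(meets_objectives(goals, p95_latency_s=1.4, tokens_per_s=620.0))
# {'latency_ok': True, 'throughput_ok': True}
```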

Step 2: Create a Locust Script

Write a Locust script (Locust is free and open source) that defines the behavior of the simulated users. Here, each simulated user represents a chatbot session sending requests to your AI model. The script should specify what each chatbot sends and how often, mimicking typical user interactions.

Step 3: Set Up Multiple Chatbots in Parallel

Configure your Locust test to run multiple chatbots in parallel. This is crucial for seeing how your AI system performs under concurrent load. In Locust, you choose how many simulated users to run at once, and how quickly to start them, when you launch the test. Make sure each virtual user (chatbot) behaves as independently as possible, so the test accurately simulates many people interacting with your AI at the same time.
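In Locust, parallelism comes from the number of users you start rather than from extra code in the script: the `-u`/`--users` option sets how many run at once and `-r`/`--spawn-rate` sets how quickly they are started. To show the underlying idea without Locust, here is a standard-library sketch in which `fake_chatbot_session` is a hypothetical chatbot that waits 20 ms per reply and receives 25 tokens each time.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Tuple


def fake_chatbot_session(user_id: int, turns: int = 3) -> int:
    """Hypothetical chatbot session: each of `turns` replies takes 20 ms and yields 25 tokens."""
    tokens = 0
    for _ in range(turns):
        time.sleep(0.02)  # stand-in for waiting on the model
        tokens += 25
    return tokens


def run_parallel(n_users: int) -> Tuple[int, float]:
    """Run n_users independent sessions at once; return (total tokens, wall-clock seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n_users) as pool:
        per_user = list(pool.map(fake_chatbot_session, range(n_users)))
    return sum(per_user), time.perf_counter() - start


total, elapsed = run_parallel(10)
print(f"{total} tokens in {elapsed:.2f} s -> {total / elapsed:.0f} tokens/s")
```

Because the ten sessions overlap, wall-clock time stays close to that of a single session while the total token count grows tenfold; against a real model, the point where that stops holding is what a concurrent test is looking for.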

Step 4: Run the Test

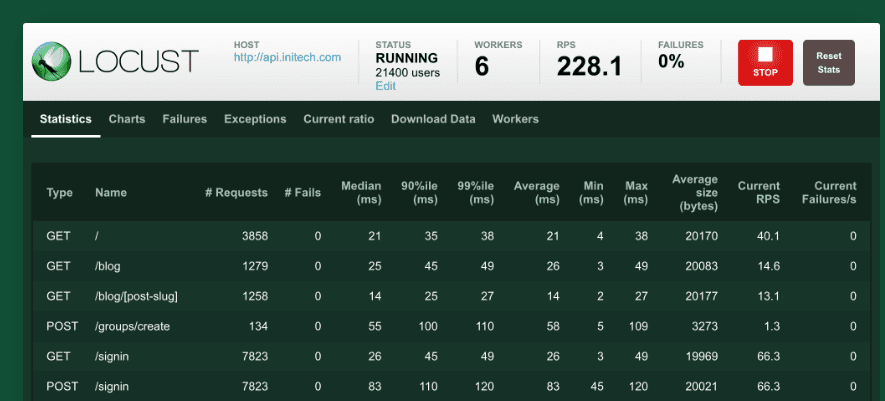

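Execute the Locust test. Locust will simulate the specified number of users sending requests to your AI model and record request counts and response times. Locust does not count tokens on its own, so your script needs to record how many tokens each response contains; from that you can work out how many tokens each instance generates per second.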

Step 5: Summarize and Analyze the Results

After the test, Locust provides a report that includes metrics such as requests per second, response times, and the number of failures, if any. To measure the AI’s speed, sum the tokens generated by all chatbots during the test and divide by the duration of the test. The result is the model’s aggregate token generation rate, in tokens per second, under the simulated load.
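The arithmetic in this step can be sketched as follows, assuming your script has logged one record per request with its start time, end time, and completion-token count. The `RequestRecord` type is hypothetical, and the four records are made-up numbers for illustration. Aggregate throughput is total tokens divided by the length of the test window; the 95th-percentile latency uses Python’s `statistics.quantiles`.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass
class RequestRecord:
    """Hypothetical per-request log entry written by the load-test script."""
    user_id: int
    started_s: float        # seconds since the test started
    finished_s: float
    completion_tokens: int


def summarize(records: List[RequestRecord]) -> dict:
    """Aggregate tokens per second over the whole window, plus latency statistics."""
    total_tokens = sum(r.completion_tokens for r in records)
    window = max(r.finished_s for r in records) - min(r.started_s for r in records)
    latencies = [r.finished_s - r.started_s for r in records]
    return {
        "total_tokens": total_tokens,
        "window_s": window,
        "tokens_per_s": total_tokens / window,
        "mean_latency_s": statistics.mean(latencies),
        # The 19th of 20 cut points is the 95th percentile.
        "p95_latency_s": statistics.quantiles(latencies, n=20, method="inclusive")[-1],
    }


records = [
    RequestRecord(0, 0.0, 2.0, 100),
    RequestRecord(1, 0.5, 3.0, 150),
    RequestRecord(0, 2.5, 4.0, 90),
    RequestRecord(1, 3.5, 5.0, 60),
]
summary = summarize(records)
print(summary)
```

With these records, 400 tokens over a 5-second window gives 80 tokens per second.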

Step 6: Optimize Based on Findings

Use the insights gained from the test to identify bottlenecks or performance issues. Depending on the outcomes, you may need to make adjustments to your AI configuration or the underlying infrastructure to improve performance.
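One common way to locate a scaling bottleneck is to repeat the test at increasing user counts and look for the point where extra users stop adding throughput. The hypothetical helper below does that over a list of (users, tokens per second) results; the 10% cut-off and the sweep numbers are illustrative assumptions, not measured data.

```python
from typing import List, Tuple


def saturation_point(sweep: List[Tuple[int, float]], min_gain: float = 0.10) -> int:
    """Return the user count after which adding load stops paying off.

    `sweep` holds (concurrent_users, aggregate_tokens_per_s) pairs from
    separate runs. Scaling counts as saturated once throughput rises by
    less than `min_gain` (an arbitrary 10% by default) from one step to the next.
    """
    points = sorted(sweep)
    for (users, tps), (_, next_tps) in zip(points, points[1:]):
        if next_tps < tps * (1 + min_gain):
            return users
    return points[-1][0]  # still scaling at the largest load tested


sweep = [(1, 40.0), (5, 190.0), (10, 360.0), (20, 400.0), (40, 410.0)]
print(saturation_point(sweep))  # 20
```

In the example, going from 20 to 40 users adds only 10 tokens per second, so the function reports 20 users as the saturation point; that is where you would start looking for a bottleneck in the model server or infrastructure.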

This testing approach not only helps ensure that your AI model deployment can handle real-world use efficiently but also aids in optimizing resource allocation to maintain consistent performance as demand fluctuates.

Why Regular Testing is Essential

Regular performance checks help you maintain an optimal user experience as your AI evolves. With constant updates and changes, regular testing ensures that performance doesn’t degrade over time and keeps your AI efficient, which is crucial for user retention and satisfaction.

To sum up: to run an AI speed test for a model like ChatGPT, simulate real-world usage. With a tool like Locust, you can script multiple chatbots running in parallel to mimic user interactions and measure the AI’s token generation rate per second. This shows how the AI responds under concurrent load and pinpoints areas to improve for both user experience and operational efficiency. By testing regularly and optimizing based on the findings, you can keep your AI performing well as usage and demand grow.
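To make regular testing actionable, you can compare each run against a stored baseline and flag regressions automatically, for example in a CI job. The function below is a minimal sketch; the metric names, the 5% tolerance, and the example figures are assumptions.

```python
from typing import Dict, List


def check_regression(baseline: Dict[str, float], current: Dict[str, float],
                     tolerance: float = 0.05) -> List[str]:
    """List metrics that got worse than the baseline by more than `tolerance`.

    Throughput (tokens_per_s) should not drop; latency (p95_latency_s) should not rise.
    """
    problems = []
    if current["tokens_per_s"] < baseline["tokens_per_s"] * (1 - tolerance):
        problems.append("tokens_per_s dropped")
    if current["p95_latency_s"] > baseline["p95_latency_s"] * (1 + tolerance):
        problems.append("p95_latency_s rose")
    return problems


baseline = {"tokens_per_s": 800.0, "p95_latency_s": 1.2}
current = {"tokens_per_s": 700.0, "p95_latency_s": 1.25}
print(check_regression(baseline, current))  # ['tokens_per_s dropped']
```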