In the rapidly evolving landscape of artificial intelligence (AI), a new contender has emerged to challenge the status quo: Groq AI. Known for its speed and efficiency, Groq has quickly become a topic of interest among tech enthusiasts and professionals alike.

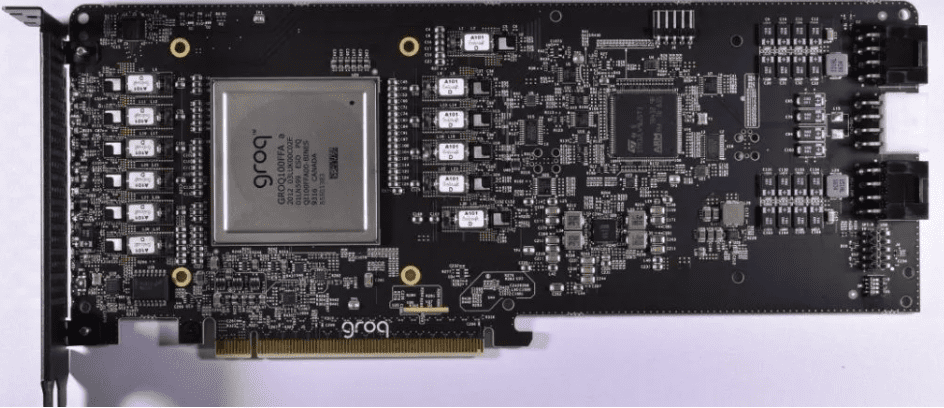

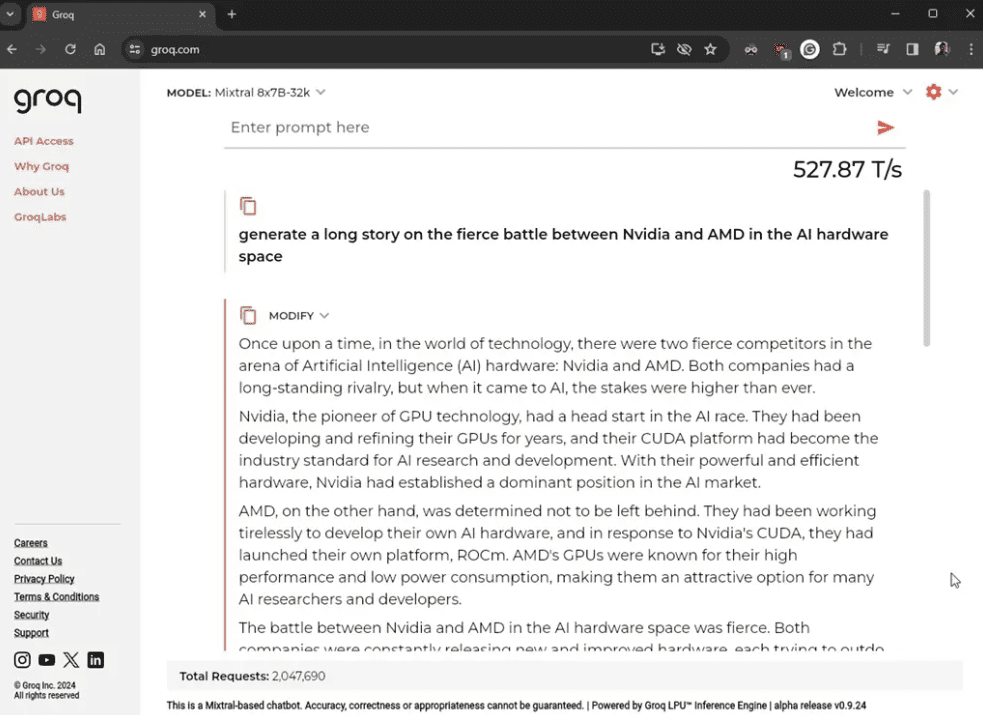

Groq’s chip owes its speed to an architecture designed to avoid the memory constraints typical of GPUs, removing a bottleneck that usually limits inference performance. Speed matters for user experience: research indicates that even a 100-millisecond speed improvement can raise user engagement by 8% on desktops and 34% on mobile devices. Groq’s chip is capable of processing 500 tokens per second.
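To put the 500 tokens per second figure in perspective, here is a minimal sketch of the arithmetic. The function name and the response lengths are illustrative assumptions, and the calculation ignores network latency and time to first token, so treat the results as rough lower bounds rather than measurements.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float = 500.0) -> float:
    """Estimate how long generating `num_tokens` takes at a steady throughput.

    The default of 500 tokens/s is the figure quoted in this article;
    real throughput depends on the model and the workload.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Illustrative response lengths (assumed, not taken from any benchmark).
for n in (100, 500, 2000):
    print(f"{n} tokens -> {generation_time_seconds(n):.2f} s")
```

At this rate, a 500-token answer would take about one second to generate.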

The new AI chip from Groq, introduced by Jonathan Ross at the World Government Summit in Dubai, is described as the world’s first Language Processing Unit (LPU). Ross left Google to launch Groq, a next-generation semiconductor startup, in 2016.

Groq Supports Running Standard Machine Learning Frameworks

Groq supports standard machine learning (ML) frameworks such as PyTorch, TensorFlow, and ONNX for inference. Groq does not currently support ML training with the LPU Inference Engine.

For custom development, the GroqWare™ suite, including Groq Compiler, offers a push-button experience to get models up and running quickly. For optimizing workloads, Groq offers the ability to hand-code to the Groq architecture and fine-grained control of any GroqChip™ processor, so customers can develop custom applications and maximize their performance.

Pros

- Exceptional Speed and Efficiency: Processing requests faster than other leading AI language interfaces.

- Custom Hardware Integration: Can handle a wide range of tasks more efficiently than models relying on general-purpose hardware.

- Scalability: Groq AI’s scalable architecture meets the demands of businesses of all sizes without sacrificing performance.

- Energy Efficiency: Optimized for energy conservation, making it a more sustainable option for eco-conscious organizations.

Cons

- Cost: Requires a higher initial investment due to its custom hardware, compared to AI interfaces using existing cloud infrastructure.

- Accessibility: Specialized hardware may limit accessibility for smaller companies or individual developers due to resource constraints.

- Compatibility Issues: As Groq AI operates on a unique chipset, integrating it with existing systems and software stacks might present challenges.

Pricing

Groq AI’s pricing structure is not universally fixed and tends to vary based on the scale of deployment and specific use cases.

Groq currently offers a 10-day free trial of its API for running LLM applications, such as Llama, under a token-based pricing model.

Interested parties are typically encouraged to contact Groq directly for a tailored quote.
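Under a token-based model, cost scales with the number of tokens sent and generated. The sketch below shows that arithmetic only; the function name and the per-million-token rates are hypothetical placeholders, not Groq’s actual prices, which should be confirmed with Groq.

```python
def estimate_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate spend under a simple per-token pricing scheme.

    Prices are expressed in USD per one million tokens. Charging
    separate rates for input and output tokens is an assumption here.
    """
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Hypothetical rates, chosen only to make the arithmetic easy to follow.
HYPOTHETICAL_INPUT_PRICE = 0.50   # USD per 1M input tokens (placeholder)
HYPOTHETICAL_OUTPUT_PRICE = 1.00  # USD per 1M output tokens (placeholder)

monthly_cost = estimate_cost_usd(
    input_tokens=2_000_000,
    output_tokens=500_000,
    input_price_per_million=HYPOTHETICAL_INPUT_PRICE,
    output_price_per_million=HYPOTHETICAL_OUTPUT_PRICE,
)
print(f"Estimated cost: ${monthly_cost:.2f}")
```

Swapping in real rates and expected monthly token counts gives a quick way to compare a quote against current spend.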

Use Cases

- Real-time Data Processing: Groq AI’s speed makes it exceptionally suited for applications requiring immediate analysis and feedback, such as financial trading algorithms or real-time monitoring systems.

- High-Volume Content Generation: Its efficiency enables rapid generation of large volumes of content, benefiting sectors like media, advertising, and e-commerce.

- Complex Computational Tasks: Given its custom hardware, Groq AI excels in complex problem-solving, making it valuable for research and development in fields like pharmaceuticals, automotive design, and environmental modeling.

Groq’s approach to data processing has the potential to reshape the computing landscape. By providing an efficient and scalable solution for data-intensive tasks, Groq’s technology has applications across many industries. As data processing demands continue to grow, solutions like Groq’s LPU will become increasingly important in enabling organizations to process and analyze data quickly and efficiently.
